About the Workshop

Generative AI (GenAI) is transforming the landscape of artificial intelligence, not just in scale but in kind. Unlike traditional AI, GenAI introduces unique challenges around interpretability, as its tens of billions of parameters and emergent behaviors demand new ways to understand, debug, and visualize model internals and their responses. At the same time, GenAI enables agentic systems—goal-driven, autonomous agents that can reason, act, and adapt across complex tasks, raising a critical question: Will AI agents eventually replace human data scientists, and if not, how might they best collaborate?

The VIS x GenAI Workshop brings together researchers, practitioners, and innovators exploring the intersection of generative AI, autonomous agents, and visualization. We focus on new challenges and opportunities, including interpreting and visualizing various aspects of large-scale foundation models, designing visual tools both for and with agents, and rethinking evaluation, education, and human–AI collaboration in the age of generative intelligence. Our workshop aims to address critical questions: How can visualization techniques evolve to collaborate with AI systems? What novel interfaces will emerge in agent-augmented analytics? How might generative AI reshape visualization authoring and consumption?

Call for Participants

We invite participation through two submission tracks: Short Paper and Mini-Challenge. Both are opportunities to showcase novel ideas and engage with the growing community at the intersection of visualization, generative AI, and agentic systems.

Track A: Short Paper

We invite short paper submissions (2–4 pages excluding references) that explore topics across theory, systems, user studies, and applications for GenAI interpretability and safety, or agentic VIS. Submissions must follow the VGTC conference two-column format, consistent with the IEEE VIS formatting guidelines. Areas of interest include, but are not limited to, the following:

- VIS for interpreting GenAI systems.

- GenAI interpretability and safety related works that highlight challenges or opportunities where VIS can fit.

- Position papers for VIS and GenAI researchers.

- Agent-augmented VIS tools.

- VIS tools for agents that agents themselves can perceive, reason over, or act upon.

- Methods and benchmarks for assessing agent performance on VIS-related tasks.

- Case studies and demos of agent systems applied to real-world VIS problems.

- Position papers on agents in VIS education, immersive visualizations for embodied agents, or multi-agent coordination in visual reasoning.

Track B: Mini-Challenge

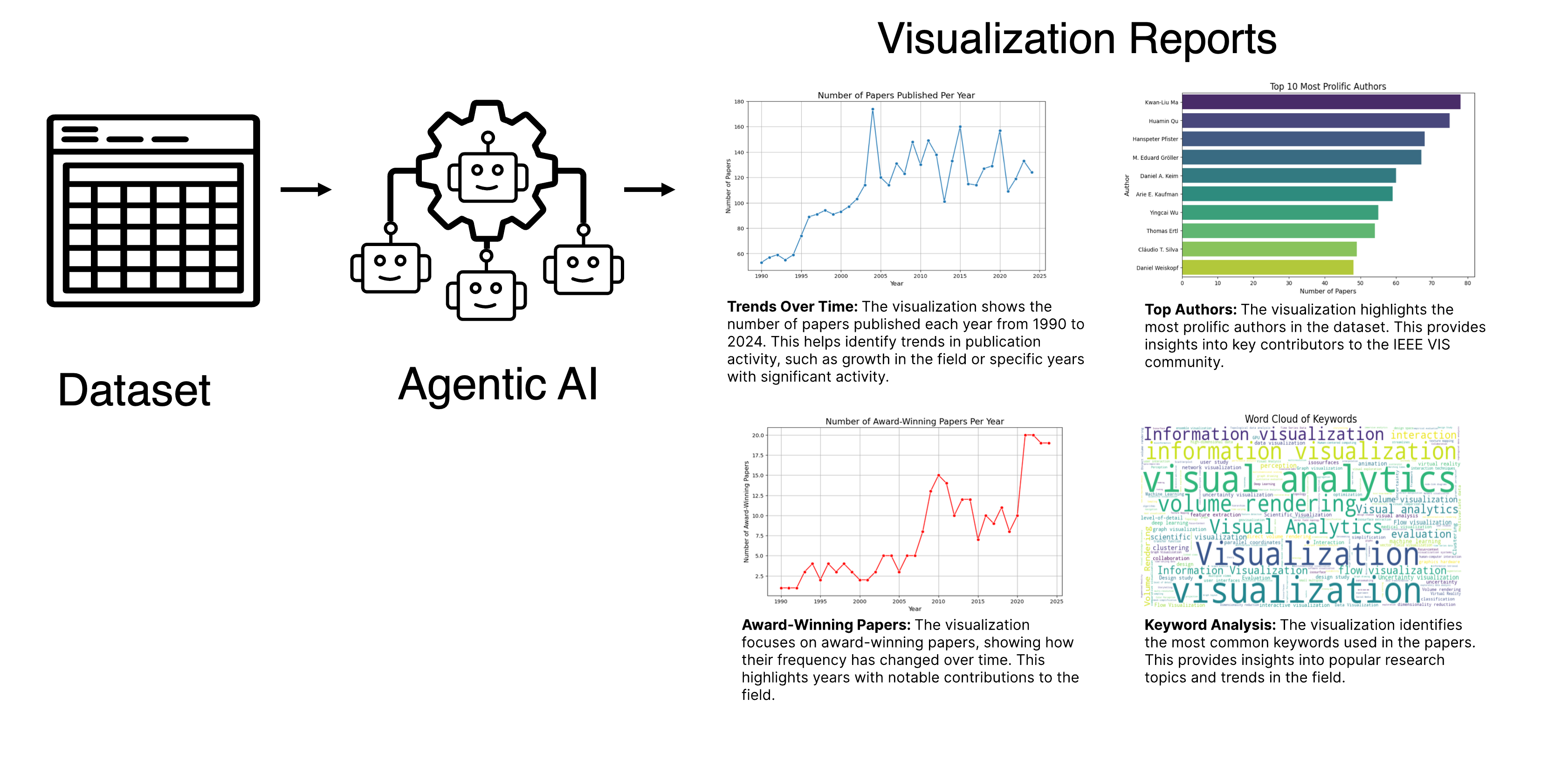

Inspired by challenges like ImageNet in computer vision and HELM in language models, our mini-challenge invites participants to build or adapt AI agents that can automatically analyze datasets and generate visual data reports.

The goal is to benchmark and accelerate progress in agent-based data analysis and communication. We will provide a starter kit and an evaluation server to support participants. During the testing period, you will be able to iteratively improve your agents by submitting them to the evaluation server. By the submission deadline, you will submit a technical report (VGTC short paper format, up to 2 pages, submitted via the PCS system) that 1) describes how your best-performing agent was developed and 2) includes a link to its best generated report.

Winning teams will receive cloud credits (sponsored by Amazon), award certificates, and an invitation to present at the workshop:

- 🥇 1st Place (x1 team): $3,000 in AWS cloud credits

- 🥈 Runner-up (x1 team): $1,500 in AWS cloud credits

- 🎖 Honorable Mention (x3 teams): $500 each in AWS cloud credits

More details, including datasets, templates, and submission instructions, can be found at visagent.org.

Submissions—including both papers and challenge reports—must be anonymous and submitted through the PCS system. Each submission will be evaluated by at least two reviewers based on quality and topical relevance. Accepted papers will be invited to present as posters, demos, or lightning talks during the workshop, and will be published on the workshop website. Top-rated challenge participants will receive awards and be invited to present their solutions at the workshop. Please join the Discord for any questions and discussions.

Important Dates

All deadlines are in the Anywhere on Earth (AoE) time zone (UTC-12).

- May 30th, 2025: Call for Participation

- August 29th (Friday), 2025: Submission Deadline

- September 10th, 2025: Author Notification

- October 1st, 2025: Camera Ready Deadline

- November 3rd (Monday), 2025: Workshop Day

Keynote Speakers

Taimur Rashid

Taimur is an accomplished product and business executive with 20+ years at the intersection of technology, product, and go-to-market strategy. He currently serves as Managing Director of AWS's Generative AI Innovation & Delivery organization, a multidisciplinary team of AI scientists, strategists, and engineers that helps organizations worldwide build and adopt end-to-end generative AI and agentic AI solutions.

Prior to rejoining AWS, Taimur served as Executive Vice President of AI at Redis, where he built the AI/ML business from the ground up, leading product strategy, product development, and market introduction, while also contributing to Redis's IPO readiness through strategic projects. He also founded Recursion Venture Capital, a boutique venture capital and management consulting firm, where he formalized 5+ years of angel investing into a micro-VC and over a decade's worth of GTM experience into a management consulting practice. At Microsoft, as GM and Head of Customer Success and Customer Engineering for Azure Data and AI, he built a top-tier organization focused on helping enterprises with cloud migration, data modernization, and digital transformation.

Taimur is a University of Texas at Austin alumnus with a focus on automata theory and knowledge-based systems. He lives in Bellevue, WA, stays active, paints, coaches youth basketball, and supports local nonprofits.

Andreas Holzinger

Andreas Holzinger pioneered interactive machine learning with a human in the loop, promoting robustness and explainability to foster trustworthy AI. He advocates a synergistic approach of Human-Centered AI (HCAI) that puts the human in control of AI, aligning artificial intelligence with human intelligence, social values, ethical principles, and legal requirements to ensure secure, safe, and controllable AI.

Andreas was elected a member of Academia Europaea, the European Academy of Science, in 2019, a member of the European Laboratory for Learning and Intelligent Systems (ELLIS) in 2020, and a Fellow of the International Federation for Information Processing (IFIP) in 2021. He obtained his Ph.D. from Graz University in 1998 and his Habilitation from Graz University of Technology in 2003. Andreas was Visiting Professor in Verona (Italy), at RWTH Aachen (Germany), at UCL (UK), and at the University of Alberta (Canada). Since 2021 he has held the endowed chair for digital transformation in smart farm and forest operations at the Department of Ecosystem Management, Climate and Biodiversity at BOKU University Vienna, and he is head of the HCAI Lab Vienna. Andreas serves as a consultant for the Canadian, US, UK, Swiss, French, Italian, and Dutch governments and for the German Excellence Initiative, and as a national expert in the European Commission. He is also on the advisory board of the German Government's AI strategy, AI made in Germany 2030.

Outside the lab, Andreas keeps fit by practicing Shotokan Karate (1st Dan).

Schedule

Organizers

- Zhu-Tian Chen, University of Minnesota

- Shivam Raval, Harvard University

- Enrico Bertini, Northeastern University

- Niklas Elmqvist, Aarhus University

- Nam Wook Kim, Boston College

- Pranav Rajan, KTH Royal Institute of Technology

- Renata G. Raidou, TU Wien

- Emily Reif, Google Research & University of Washington

- Olivia Seow, Harvard University

- Qianwen Wang, University of Minnesota

- Yun Wang, Microsoft Research

- Catherine Yeh, Harvard University

Challenge Development Team

- Pan Hao, University of Minnesota

- Divyanshu Tiwari, University of Minnesota

- Chia-Lun (James) Yang, University of Minnesota

- Zhu-Tian Chen, University of Minnesota

- Qianwen Wang, University of Minnesota

Sponsor

Our workshop is sponsored by Amazon.